I only have an RTX 3060, but hopefully this still works.

The FP8 workflow may work, but it’s a bit borderline. The great news is that there will be cloud solutions available to run your workflow on, so you don’t have to spend a large amount of money on a new GPU. This Flux 1 Dev workflow requires 24 GB of VRAM, so it’s really targeted at a 3090 or above, but I even have a test setup on RunPod that uses a 24 GB A5000 for as little as 20 cents an hour. Even if your 3060 can run it, you will find it much faster and more fun when you have access to quicker generations and the power of local CG-driven AI pipelines. There are lots of great solutions on the table to get you running the latest and greatest open-source models!
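For anyone wondering where a figure like 24 GB comes from, here is a back-of-the-envelope sketch. It assumes the Flux 1 Dev transformer has roughly 12 billion parameters and counts only the model weights (not the text encoders, VAE, activations or ComfyUI overhead), so real usage will be higher. The names `BYTES_PER_PARAM` and `weight_memory_gb` are just illustrative, not part of any tool.

```python
# Rough weight-memory estimate for a diffusion transformer at different precisions.
# Assumption: Flux 1 Dev's transformer has about 12 billion parameters.
# This counts weights only: no text encoders, VAE, activations or framework overhead.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "fp8": 1.0,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    flux_params = 12e9  # assumed parameter count for the Flux 1 Dev transformer
    for precision in ("bf16", "fp8"):
        gb = weight_memory_gb(flux_params, precision)
        print(f"{precision}: ~{gb:.0f} GB of weights")
```

Under that assumption the BF16 weights alone come to roughly 24 GB, and FP8 roughly halves that to about 12 GB, which comes close to filling a 12 GB card before anything else is loaded. That is one way to see why FP8 on a 3060 is borderline.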

Hi all,

We’ve already seen some amazing early results shared in the forum — great work, everyone!

Just a quick reminder: if you’ve created something you really like with AI Render, don’t forget to post it in the Community Challenge thread for a chance to win a free copy of CC5!

We’re still in the first entry window, which runs until August 8, so there’s plenty of time to join in. Keep creating, and keep sharing!

Gonna have to wait, Jason. I’m sure everyone is pushing the hardware on the AI renderer as hard as they can before the first draw. I did a couple of animation tests, but as usual, AI messed up the hands. There’s a limited number of draw winners, so I doubt people are going to put out less than their best. This IS A FREE copy of CC5, after all.

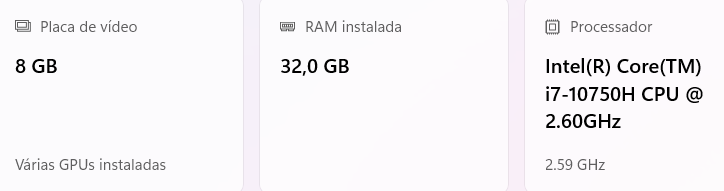

I have a question. I’m at my estate in Canada and I only have an Alienware laptop here with a GTX 1070 with 8 GB of VRAM. I have upgraded the RAM to 32 GB.

So will it simply not run, or run but very slowly?

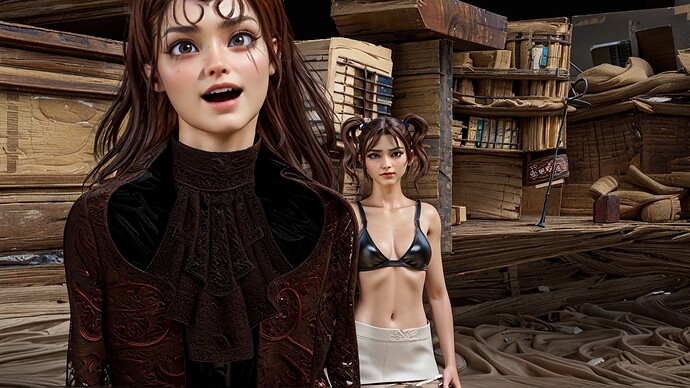

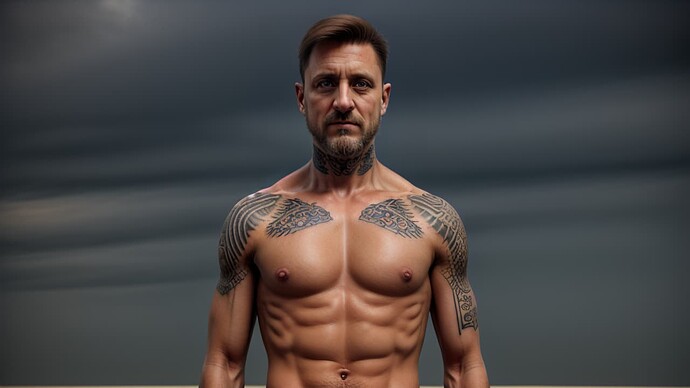

Flux 1 Dev Krea + CC4 Camila + iClone Depth ControlNet

Just wrapped a round of testing with Flux 1 Krea Dev and my custom CC4-trained LoRA for Camila, piped straight into our existing Flux 1 Dev ComfyUI workflow with iClone-powered Depth ControlNet handling pose and realism. The results? Absolutely next-gen.

Images below were all rendered using the new Flux 1 Krea Dev model just released by the BFL team:

Black Forest Labs - Frontier AI Lab

This model brings sharper structure, better skin tones, refined hands, and most importantly — it plays nice with stylized LoRA characters and production-level ControlNet chains. No weird warping, no softness. Just raw cinematic clarity. And yes — it runs locally.

If you’re already using Flux 1 Dev, the Krea variant slots right in. No extra config needed. Just swap and go. Perfect for those of us building real-time pipelines with LoRA-trained CC4 characters and iClone-driven animation sequences.

With this setup:

• Stylized LoRA + consistent character rendering
• Depth maps from iClone / scene previs
• Real-time preview + compositing in Comfy
• Open-source, local, super fast with 24 GB of VRAM

This isn’t just image generation anymore. It’s open-source visual FX, and it’s evolving faster than anyone predicted.

Let’s go.

I downloaded a copy yesterday and spent the entire day playing around with it. I am the type to install and play. LOL

I am discovering so much. For example, changing the render output size changes the render, and changing the animation changes the character. OMG, I rendered one scene at 720 because it would crash at 1920… and it changed the look of the character and the scene, and changed the entire theme of the scene. It also removed the top of one of the characters and made the breasts so small she looked like a topless man. Kooky.

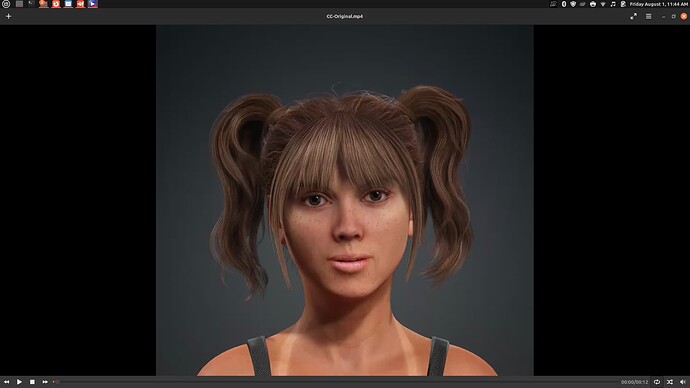

This is the 1920 render

This is what the 720 render looks like.

This is a screen-cap from iClone of the original.

Interesting changes. Same 3d toon effect, different render sizes.

In CC4 it is the same.

Original (screen cap from video I cannot upload. I forgot to do a still on this one.) This is the animation that added the tattoo to the neck. That is rendering atm. OMG, rendering is sooooo slow. LOL.

This still image was generated using the default “female talk” animation loaded.

This is the exact same render with the default “female emote” animation loaded. Nothing else changed, just the animation preset. It added a tattoo to her neck. LOL

So, by using the same render size, the same animation and the same render, I was able to get a consistent render flow.

Original

3D Toon Render

Here is one of my toonish creations rendered with the 3D toon render.

Original

3D Toon Render

Anyway, I am having fun playing with this. The nodes look interesting. HAHAHA. I’m wondering what changing those will do.

I wonder if you can render full-size videos with a paid account or purchased credits. Or maybe my video card is only strong enough for the small stuff: an RTX 3060 with 12 GB of VRAM. Basically the minimum required. HAHAHA. Only 2 years old. Gotta love it.

I hope this stays free when it’s out of beta. It’s a nice addition to have fun with, but I’m not sure I would use anything AI-generated when doing work for someone else.

One last one… she is my favorite.

Original (not same expression but same character)

The first three are from the top image; the second two are another pose of the same character.

Last but not least… this is my favorite render of this model/character.

Thanks for the free beta. I am having too much fun with it.

It can be both in some cases. The larger models are very power-hungry and simply can’t run on local hardware if the VRAM requirements aren’t met. However, there are many solutions that let you run iClone locally on your existing hardware and run the ComfyUI side in the cloud, on something like RunPod! Webinars, workflows, setup guides, test LoRAs and much more are coming soon to help users get ready to really push the bounds of what is possible. For some of the large video models even a 5090 will barely get it done, so this is simply the more economical way to run them quickly without investing thousands of dollars in a new GPU or an entire computer. We have seen great success with quantized models for both image and video workflows. James and I have also been testing these reduced models on a 3080 laptop and can achieve results that are very close to the full models. This also makes it incredibly easy to scale up production by running multiple jobs at once, so you can get more done with a smaller, more efficient team!

Thanks! I guess I will just try it out with what I have here and see how it goes. I think it is a fascinating development for those of us who want to stay with the RL ecosystem.

You should be able to get some of the SD1.5 stuff to run for you; it may just take a while. Most of these models are highly reduced versions of models that were originally intended to run on large servers powered by cards like the H100 or H200 with 80–141 GB of VRAM, so it is very cool that we are able to run them and play with them locally on a consumer-grade PC. Good luck with your testing!

Yeah, RL tries to use little VRAM because iClone or CC needs memory too; that’s why they chose reduced models. The more VRAM you have, the more you can do, for example by using bigger models.

Hello all. It’s great to see there’s hope of something special for iClone renders. Imagine if we could render short films at this quality; it’s a dream.

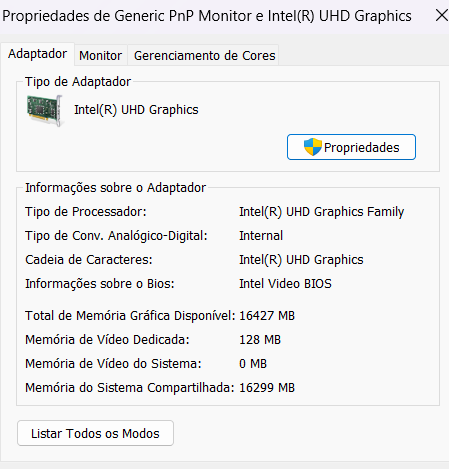

I now have a laptop with 32 GB of RAM, an i7 and an RTX 2070, but when I check my system requirements, the adapter doesn’t show the RTX, only Intel(R) UHD Graphics. So my question is: am I missing something, or can my laptop not try the new beta renderer?

I don’t remember exactly how, but I believe there is a setting to enable the graphics card for laptops. There were posts about that a long time ago. Maybe someone who really knows can chime in.

Watch this video:

TLDW version: Click on the Windows button, type GPU, open the Windows graphics settings, add the executable to the list of apps, open its options and select the GPU.

Yes, they certainly do…

Tip: before hitting Render, switch the viewport mode to Minimal. That frees up a considerable amount of VRAM (depending on the scene).

Thanks a lot, RL. I’ve now installed it and tested it a little, but only with images. It doesn’t seem to be suitable for video, 2K–4K, etc. yet.

Best regards, Robert

Yep. Same for me.

Try upscaling after generating. For the FusionX model, I use 832x480 for longer videos and then upscale. It is possible to use 1280x720, but you need a lot of VRAM and it takes longer. You can also try a lower resolution like 640x360 and then upscale, depending on your scene. Wan2.1 (FusionX) has no problem with other resolutions, but as far as I know it is trained on 832x480.
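To put those resolutions in perspective, here is a small sketch comparing per-frame pixel counts (only a rough proxy for compute and memory per frame, not an exact VRAM measure) and the scale factors each would need to reach 1920x1080. The helper names are illustrative, and 1920x1080 is just an example target, not something the workflow requires.

```python
from fractions import Fraction


def pixel_count(width: int, height: int) -> int:
    """Total pixels in one frame."""
    return width * height


def scale_factors(src: tuple[int, int], dst: tuple[int, int]) -> tuple[Fraction, Fraction]:
    """Exact horizontal and vertical scale factors from src to dst resolution."""
    (sw, sh), (dw, dh) = src, dst
    return Fraction(dw, sw), Fraction(dh, sh)


if __name__ == "__main__":
    base = (832, 480)
    candidates = [(640, 360), (832, 480), (1280, 720)]
    target = (1920, 1080)  # example delivery resolution (assumption)

    # Relative pixel load compared with the 832x480 baseline.
    for res in candidates:
        ratio = pixel_count(*res) / pixel_count(*base)
        print(f"{res[0]}x{res[1]}: {ratio:.2f}x the pixels of 832x480")

    # Whether a single uniform upscale reaches the target exactly.
    for src in candidates:
        sx, sy = scale_factors(src, target)
        kind = "uniform" if sx == sy else "non-uniform (aspect ratio differs)"
        print(f"{src[0]}x{src[1]} -> {target[0]}x{target[1]}: "
              f"x{float(sx):.3f}, y{float(sy):.3f} ({kind})")
```

The numbers line up with the advice above: 1280x720 pushes about 2.3 times as many pixels per frame as 832x480, while 640x360 is a bit over half. Note also that 640x360 and 1280x720 are exact 16:9 and upscale by a clean 3x and 1.5x, whereas 832x480 is slightly narrower than 16:9, so reaching 1920x1080 from it needs a small crop or pad rather than a single uniform scale.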

For upscaling you can also look into Topaz Gigapixel AI. I’ve had pretty good results with it in the past, although I now use it mainly for images.