That’s actually what I was thinking too, using a similar analogy to Motion Blur integration. The wider the frame range you use, the stronger/“better” the output should be.

I did not want to continue in the challenge thread…

Thank you for the starter pointers. So the question is, are you using the RL ComfyUI or a base install from git?

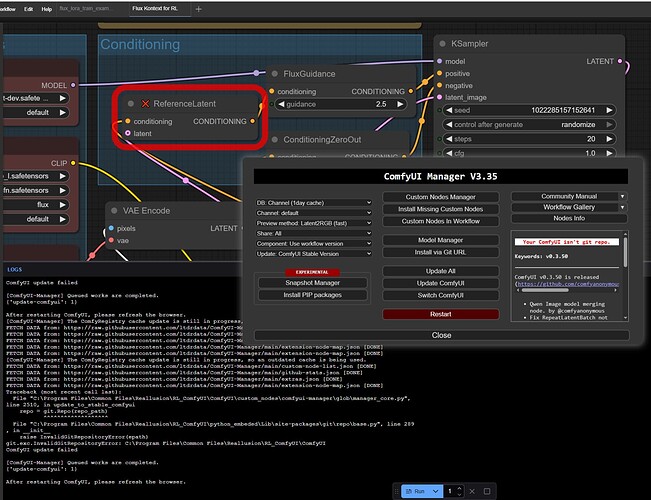

The reason I am asking is that I installed all the necessary modules according to the notes, but a couple of nodes were not detected (ReferenceLatent and FluxKontextImageScale).

So it seems ComfyUI needs an update. I tried to update from the Manager and it failed. I suppose the message “Your ComfyUI isn’t a git repo” explains why the update failed.
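For anyone who wants to check this on their own install, here is a minimal sketch in Python. It only tests whether a folder directly contains a `.git` entry, which is a rough indicator of a git checkout and not necessarily how the Manager itself decides. The function name and the example path are made up for illustration.

```python
from pathlib import Path


def looks_like_git_checkout(folder: str) -> bool:
    """Return True if `folder` directly contains a `.git` entry.

    `.git` is a directory in a normal clone and a plain file in
    worktrees or submodules, so exists() covers both cases.
    """
    return (Path(folder) / ".git").exists()


# Hypothetical usage; replace the path with your own ComfyUI folder:
# print(looks_like_git_checkout(r"D:\AI\ComfyUI"))
```

If this returns False for the ComfyUI folder, the install was most likely copied or bundled rather than cloned, which would be consistent with the message above.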

I am not sure if I want to go ahead and update some “other” way and possibly break the connection with RL.

Also VRAM usage… Ouch! Even fp8_scaled is 20 GB. I hope my 24 GB card can handle it… when (if) I can use the workflow.

Yes, you are right. I used my own ComfyUI installation, as I have used it for a year now and it has all the models/nodes I use most of the time.

I just plugged it into iClone and CC using the setup from the AI Render plugin.

As for VRAM, it all depends. When you use native loaders, as is the case here, I know that ComfyUI can fall back to system RAM (but of course rendering will then take a lot longer).

Anyway, if you really want to push AI rendering to its limits, a custom ComfyUI installation would be the way to go.

Or even go the RunPod route (a cloud service where you can rent high-capacity GPUs and pay by the minute).

Tell me if you have issues installing ComfyUI and the nodes, or setting it up in the plugin settings. I will be more than glad to help.

Hi 4u2ges,

Thank you for making us aware of this problem. We’ll put it on the list to be fixed.

Oh my God, that looks very complicated.

I’ll wait for the tutorials first.

I have virtually no experience with AI.

Greetings Robert

I assume that Headshot will not be developed any further? TBH I would rather see a Headshot 3 where I can make my character look exactly like the original instead of this.

Headshot 3 is currently in development. It is not being discontinued.

Can you choose where (which drive) to install ComfyUI and/or the models? I’d like to conserve space on my system drive if possible.

Hello, gordryd

You can choose the installation location when installing AI Render. By default, the installer sets ComfyUI to be installed in CommonFiles, but you may change it to any location that is convenient for you.

Hope it works for you.

It’s practically impossible to render different genders in the same scene (especially from the same character). So I rendered them separately and composited them.

Mr. and Mrs. Goblin

2 renders in iClone:

AI renders composed:

I continue experimenting with AI going deeper (without going outside of iClone into Comfy and LoRA).

This time I targeted animals. Normally it takes a few dozen shots before I am satisfied with the rendering of a complex, multi-character project.

So I pulled out an old demo project with a cat, a dog and an old gentleman, and after about that many tries I got this.

Settled on Film Style and tried to keep the denoise level relatively high (around 0.6) to let the AI “figure” it all out (a slight change of camera angle, lights and resolution could have a huge impact on the outcome). Relied fully on canny as pose is not generated for Humanoids. Used a related positive prompt at CFG 4 (no negative prompt was specified). “Caught” the most successful seed and made it Fixed for further tweaking and testing of other parameters. Also added add_detail.safetensors (which is not a default in Film Style) and settled on a value of 2.5 to get better fur and cloth details.
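To make the settings above easier to reproduce, here they are written down as a small Python record. This is only a note-taking sketch: the class and field names are hypothetical and are not the plugin's or ComfyUI's actual parameter names. The values are the ones described in the post (CFG is read here as 4).

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class RenderSettings:
    """Hypothetical record of one AI render setup (names are illustrative)."""
    style: str
    denoise: float
    cfg: float
    control: str
    seed_mode: str
    negative_prompt: str
    detail_lora: str
    detail_lora_weight: float


# Values taken from the description above.
settings = RenderSettings(
    style="Film",
    denoise=0.6,                 # "around 0.6"
    cfg=4.0,
    control="canny",             # canny only, no pose
    seed_mode="fixed",           # fixed after finding a good seed
    negative_prompt="",          # none was specified
    detail_lora="add_detail.safetensors",
    detail_lora_weight=2.5,
)

print(asdict(settings))
```

Keeping a record like this next to each saved render makes it easier to change one parameter at a time while the seed stays fixed.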

In the end the dog and the old gentleman came out perfectly fine (although the dog’s face does not resemble a collie). The cat also looks fine, but its face resembles a dog/wolf/tiger. Maybe there is such a breed, but I think it’s like the issue with different genders in the same render. I think the dog’s face was projected onto the cat.

Anyway…

Hilarious.

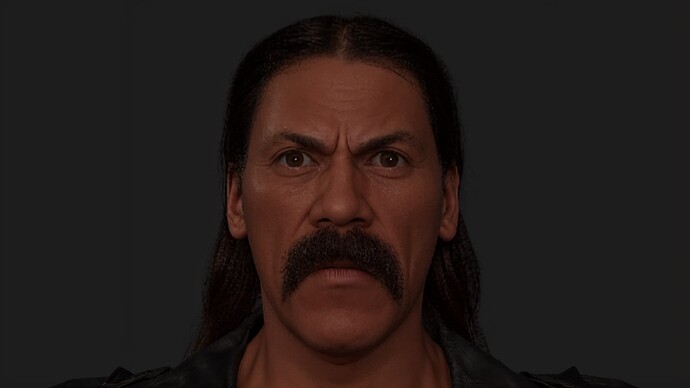

Wanted to test out AI Render on some of my celebrity look-alike characters on the marketplace. I like my version on most of these, but I’m not mad at any of them…

Hi all,

We now have cloud-GPU templates for AI Render on RunComfy & RunPod — making it easy to skip setup and run workflows directly in the cloud. They fully support our preset workflows, plus the Flux image and WAN video workflows coming in the webinars.

Thanks to RunComfy, there are also special offers for Reallusion users (credits & subscription discounts).

More details here: [Cloud-GPU Templates for AI Render — Powered by RunComfy & RunPod]

What is the highest resolution we can generate?