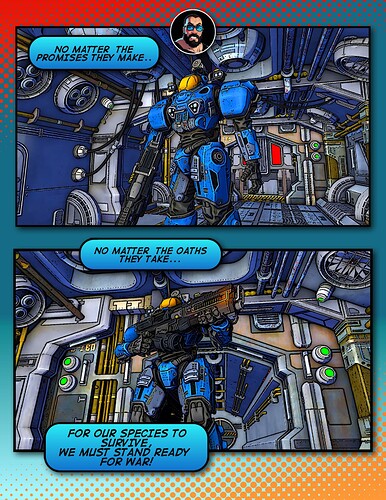

To avoid flooding the “iClone 8 Render Engine” thread, I’m starting this new topic in the hope that C4D- and Redshift-related posts can be gathered here.

In view of the discussion about various global illumination (GI) modes in Redshift, I have run a few renders with different settings. The scene is an interior view of a diner, with the following features:

- 2+ million polygons without instancing, 6+ million with instancing

- There are a number of (practical) lights in the scene, plus a sun-and-sky rig for the exterior

- The exterior visible through the windows is mesh-based (not an HDRi)

- For the following renders, the same output size (3840x1644) and render settings were used; the only change is the GI mode for the primary and secondary methods

- The render settings include post-render denoising with OptiX (built into RS), plus a light application of RS post effects. No further post work was done outside of C4D

- All render times are in seconds on a single RTX4090

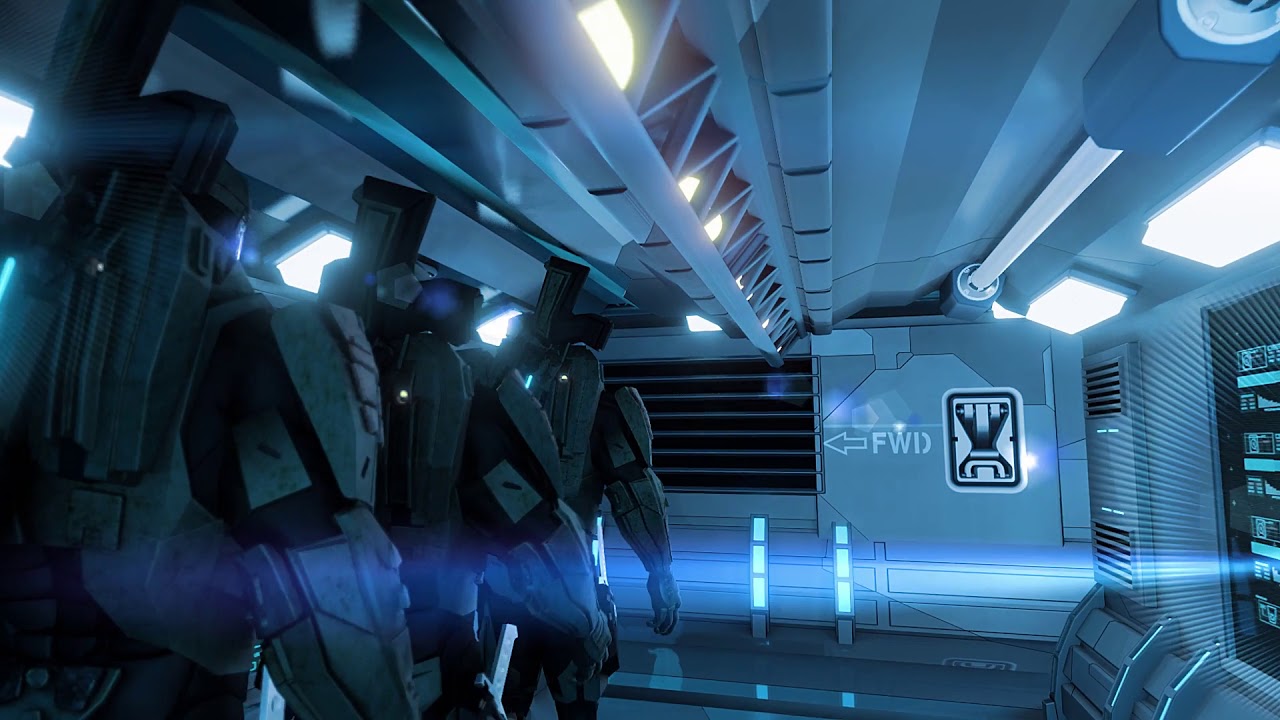

Image #1, No GI, 84 seconds

Image #2, Brute Force for primary and secondary method, 149 seconds

Image #3, Brute Force for primary, Irradiance Point Cloud for secondary, 242 seconds

Image #4, Irradiance Cache for primary, Irradiance Point Cloud for secondary, 296 seconds (i.e., almost 5 minutes)
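To put these timings in proportion, here is a small Python sketch (not part of the original workflow) that expresses each render time as a multiple of the No GI render (Image #1) and of the Brute Force/Brute Force render (Image #2). The numbers are the four times listed above; the names `render_times` and `relative_times` are purely illustrative.

```python
# Render times in seconds on a single RTX 4090, taken from the list above.
render_times = {
    "Image #1": 84,   # No GI
    "Image #2": 149,  # Brute Force / Brute Force
    "Image #3": 242,  # Brute Force / Irradiance Point Cloud
    "Image #4": 296,  # Irradiance Cache primary
}


def relative_times(times, reference):
    """Return each render time divided by the time of the `reference` render."""
    ref = times[reference]
    return {name: t / ref for name, t in times.items()}


if __name__ == "__main__":
    vs_no_gi = relative_times(render_times, "Image #1")
    vs_brute = relative_times(render_times, "Image #2")
    for name, seconds in render_times.items():
        minutes, secs = divmod(seconds, 60)
        print(f"{name}: {seconds} s ({minutes} min {secs:02d} s), "
              f"{vs_no_gi[name]:.2f}x No GI, {vs_brute[name]:.2f}x Brute Force")
```

Run as-is, it shows that Brute Force for both bounces costs roughly 1.8x the No GI render, while Image #4 takes about 3.5x as long as No GI and nearly twice the Brute Force time.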

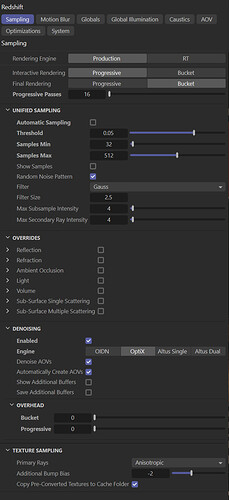

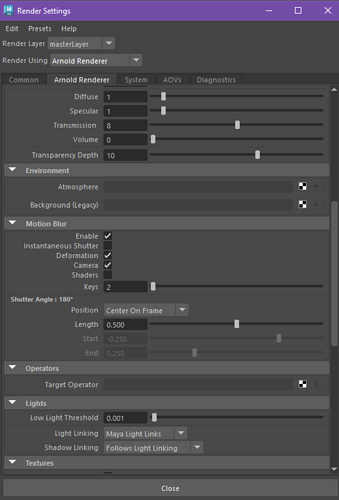

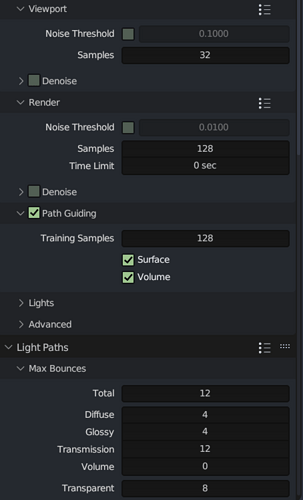

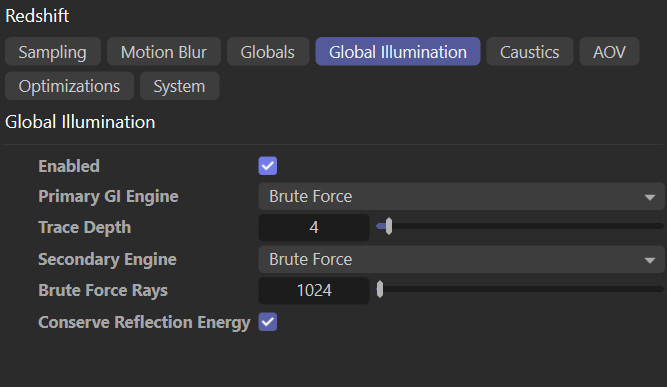

Render settings:

Conclusions:

- No GI is obviously the fastest, but also looks the “worst” (relatively speaking)

- Brute Force for both primary and secondary GI beats every other combination of GI methods I tested, hands down, for THIS scene