Hi,

I am developing a plug-in that receives our full-body motion capture data and uses it to control an avatar in iClone 8. I followed the IC8 Python API reference (Modules), and part of my code is shown below:

self.body_device = manager.AddBodyDevice(self.avatar_name + "_BodyDevice")

self.body_device.AddAvatar(self.avatar)

self.body_device.SetEnable(self.avatar, True)

body_setting = self.body_device.GetBodySetting(self.avatar)

body_setting.SetReferenceAvatar(self.avatar)

body_setting.SetActivePart(RLPy.EBodyActivePart_FullBody)

body_setting.SetMotionApplyMode(RLPy.EMotionApplyMode_ReferenceToCoordinate)

self.body_device.SetBodySetting(self.avatar, body_setting)

device_setting = self.body_device.GetDeviceSetting()

device_setting.SetCoordinateOffset(0, [0, 0, 0])

position_setting = device_setting.GetPositionSetting()

rotation_setting = device_setting.GetRotationSetting()

rotation_setting.SetType(RLPy.ERotationType_Quaternion)

rotation_setting.SetQuaternionOrder(RLPy.EQuaternionOrder_XYZW)

rotation_setting.SetCoordinateSpace(RLPy.ECoordinateSpace_Local)

position_setting.SetUnit(RLPy.EPositionUnit_Meters)

position_setting.SetCoordinateSpace(RLPy.ECoordinateSpace_Local)

Then I initialize the bones:

self.body_device.Initialize(self.bone_list)

self.body_device.SetTPoseData(self.avatar, self.naturel_pose_data)

Finally, I update the bones:

self.body_device.ProcessData(0, self.now_pose_data)

I encountered two problems:

-

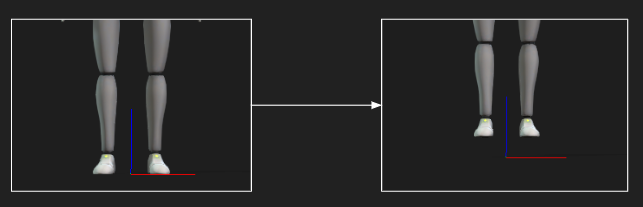

after processing the data, my avatar shifts upward by an offset, as shown in the attached image. Even if I don’t update the now_pose_data, it still happens.

- If I move the avatar to a specific position using the gizmo, it snaps back to the world center after processing the mocap data. Could this be caused by the EMotionApplyMode_ReferenceToCoordinate flag?

When I switch to EMotionApplyMode_ReferenceToAvatar, the avatar stays where I placed it, but the rotation becomes incorrect: some avatars rotate 90 degrees around the Z-axis, while others rotate 135 degrees (and so on).

Do I need to convert the mocap hip bone’s rotation data into a specific coordinate system when using EMotionApplyMode_ReferenceToAvatar? The data I’m using is the local transform relative to its parent. In a simple case, the hip’s parent is the avatar’s base object, but the avatar still does not maintain its original facing direction.
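For readers following along, the "local transform relative to its parent" mentioned above can be sketched in plain Python, independent of RLPy. This is only an illustration under stated assumptions: rotations are unit quaternions in XYZW order (matching EQuaternionOrder_XYZW in the code above), and the helper names are hypothetical, not part of the iClone API.

```python
def quat_conjugate(q):
    """Conjugate of an XYZW quaternion (equals the inverse for unit quaternions)."""
    x, y, z, w = q
    return (-x, -y, -z, w)


def quat_multiply(a, b):
    """Hamilton product a * b for XYZW quaternions."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )


def world_to_local(parent_world, child_world):
    """Rotation of the child relative to its parent.

    Assumes child_world = parent_world * local, so
    local = inverse(parent_world) * child_world.
    """
    return quat_multiply(quat_conjugate(parent_world), child_world)
```

If the hip and its parent share the same world rotation, world_to_local returns the identity quaternion (0, 0, 0, 1), which is a quick sanity check on incoming data.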

I want to keep the avatar at the specific position and rotation I set and start the mocap from that orientation. How can I do this correctly? Are there any sample code or examples available?

Thanks,

B.R

Brian

Hi Brian,

You can find the Mocap API sample code here:

This is the documentation for the Mocap sample:

Hope this helps. Thanks

Johnny

Hi, Johnny.

Thanks for your reply.

I have checked the sample code and the documentation you provided above, but they don’t seem to solve the problems I am facing. I can control the avatar with our mocap device correctly, except for the following two issues:

- Upward offset issue

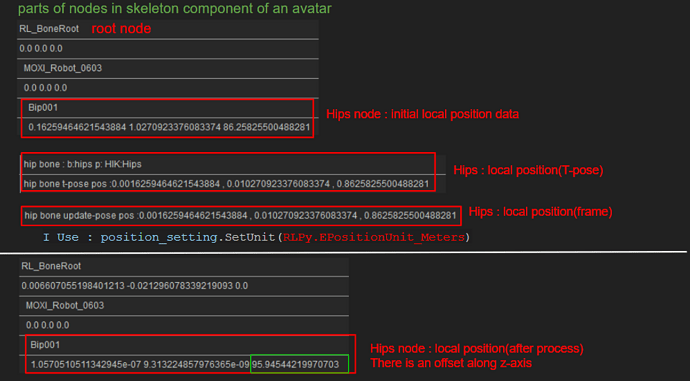

The image shows the local position data I used for setting the T-pose and processing.

I also printed the local position of the hip bone. After processing the data, we can see an offset compared to its initial state. (The motion data I used are in meters.)

As a result, the avatar is lifted by approximately 10 centimeters from the floor.

- How to make the avatar start mocap at a specific position and rotation?

Should I use the API:

SetMotionApplyMode(RLPy.EMotionApplyMode_ReferenceToAvatar)?

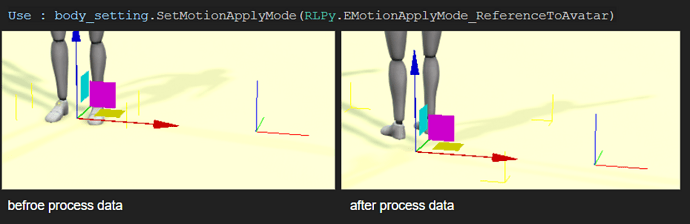

At first, I used RLPy.EMotionApplyMode_ReferenceToCoordinate, but it seems to automatically reset the avatar to the world center. However, when I use ReferenceToAvatar, I encounter the problem shown in the image.

The avatar remains at the position I set, but its rotation becomes incorrect after processing the data. Do I need to do anything extra when using this mode?

Thanks

B.R

Brian.

Hi, Brian.

- Upward offset issue

Your input data looks correct. Could you provide sample code so we can investigate the problem? In the meantime, you can call the following API directly to subtract the offset:

body_device.GetBodySetting(avatar).SetCoordinateOffset(rot, pos)

- How to make the avatar start mocap at a specific position and rotation?

About the parameter settings of SetMotionApplyMode():

If you still encounter unexpected character orientation when using RLPy.EMotionApplyMode_ReferenceToAvatar, it is likely caused by a mismatch between your character’s facing direction and the world coordinate orientation. You can call the following API to correct the character’s orientation:

init_hip_rot_matrix = RLPy.RMatrix3(RLPy.RMatrix3.IDENTITY)

init_hip_rot_matrix.RotationZ(rot)

device_setting = body_device.GetDeviceSetting()

device_setting.SetInitialHipRotation(init_hip_rot_matrix)
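As a plain-Python illustration of what a rotation about the Z axis does to a facing direction, here is a minimal sketch that does not use RLPy. The function name and the -Y facing vector are hypothetical, and whether RMatrix3.RotationZ expects radians or degrees is not stated in this thread, so check the RLPy reference before reusing the angle.

```python
import math


def rotate_about_z(vec, angle_rad):
    """Rotate a 3D vector about the Z axis (right-handed, counterclockwise seen from +Z)."""
    x, y, z = vec
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)


# Hypothetical example: a character facing -Y, turned by 90 degrees about Z.
facing = rotate_about_z((0.0, -1.0, 0.0), math.radians(90.0))
```

With these inputs the facing vector ends up along +X (up to floating-point error), which shows how a 90 degree mismatch in the initial hip rotation appears in the viewport.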

I hope this information is helpful. Thank you.

B.R

Johnny

Hi Johnny.

Thanks for your reply.

I have two follow-up questions:

1. Regarding using SetCoordinateOffset(rot, pos) to solve the offset issue:

How do I get the offset first? The offset problem occurs after this step:

self.body_device.ProcessData(0, self.now_pose_data)

So, should I get the offset after processing the data for the first time and then call SetCoordinateOffset?

2. Regarding the RLPy.EMotionApplyMode_ReferenceToAvatar mode:

init_hip_rot_matrix = RLPy.RMatrix3(RLPy.RMatrix3.IDENTITY)

init_hip_rot_matrix.RotationZ(rot)

device_setting = body_device.GetDeviceSetting()

device_setting.SetInitialHipRotation(init_hip_rot_matrix)

In the step init_hip_rot_matrix.RotationZ(rot), what value should be assigned to the rot rotation parameter? Should I get it from the hip’s local transform, or from some other data?

Also, the incorrect rotation occurs after processing the data.

So, should I get or calculate this value after processing the data for the first time?

Alternatively, could the offset function be used to fix this issue?

body_device.GetBodySetting(avatar).SetCoordinateOffset(rot, pos)

Here is the part of the code I used in my plugin to update the avatar via the API.

# mocap manager

mocap_manager = RLPy.RGlobal.GetMocapManager()

# get avatars (excerpt from inside a function, hence the return)

_mocap_avatar = []

avatar_list = RLPy.RScene.GetAvatars()

if len(avatar_list) <= 0:

    return _mocap_avatar

for avatar in avatar_list:

    data = MiAvatar(avatar)

    _mocap_avatar.append(data)

# setting

self.body_device = manager.AddBodyDevice(self.avatar_name + "_BodyDevice")

self.body_device.AddAvatar(self.avatar)

self.body_device.SetEnable(self.avatar, True)

body_setting = self.body_device.GetBodySetting(self.avatar)

body_setting.SetReferenceAvatar(self.avatar)

body_setting.SetActivePart(RLPy.EBodyActivePart_FullBody)

body_setting.SetMotionApplyMode(RLPy.EMotionApplyMode_ReferenceToCoordinate)

# body_setting.SetMotionApplyMode(RLPy.EMotionApplyMode_ReferenceToAvatar)

self.body_device.SetBodySetting(self.avatar, body_setting)

device_setting = self.body_device.GetDeviceSetting()

device_setting.SetMocapCoordinate(RLPy.ECoordinateAxis_Z, RLPy.ECoordinateAxis_NegativeY, RLPy.ECoordinateSystem_RightHand)

device_setting.SetCoordinateOffset(0, [0, 0, 0])

position_setting = device_setting.GetPositionSetting()

rotation_setting = device_setting.GetRotationSetting()

rotation_setting.SetType(RLPy.ERotationType_Quaternion)

rotation_setting.SetQuaternionOrder(RLPy.EQuaternionOrder_XYZW)

rotation_setting.SetCoordinateSpace(RLPy.ECoordinateSpace_Local)

position_setting.SetUnit(RLPy.EPositionUnit_Meters)

position_setting.SetCoordinateSpace(RLPy.ECoordinateSpace_Local)

# initialize bones

self.body_device.Initialize(self.bone_list)

self.body_device.SetTPoseData(self.avatar, self.naturel_pose_data)

# process data in timer

self.body_device.ProcessData(0, self.now_pose_data)

Thanks,

B.R

Brian.

Hi Johnny,

Thanks for your help.

I measured the upward hip offset by passing the T-pose data to the first ProcessData call, and then applied this offset as a parameter to the API:

body_device.GetBodySetting(avatar).SetCoordinateOffset(rot, pos)

Finally, the upward offset problem has been solved!
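A minimal sketch of that offset calculation, in plain Python and not taken from the actual plugin: compare the hip position before processing with the position observed after feeding the T-pose data once, and use the difference as the correction. The function name and the sample values are hypothetical, and the units and argument types that SetCoordinateOffset expects are not stated in this thread.

```python
def hip_offset_correction(rest_hip_pos, processed_hip_pos):
    """Translation that cancels the drift observed after the first ProcessData call."""
    return [rest - processed for rest, processed in zip(rest_hip_pos, processed_hip_pos)]


# Hypothetical values in meters: the hip rose by about 10 cm after processing.
rest = [0.0, 0.0, 0.95]
processed = [0.0, 0.0, 1.05]
correction = hip_offset_correction(rest, processed)
```

Here the correction is roughly 0.10 m downward along Z; convert it to whatever unit the offset API expects before passing it as pos.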

Regarding RLPy.EMotionApplyMode_ReferenceToAvatar and the sample code:

init_hip_rot_matrix = RLPy.RMatrix3(RLPy.RMatrix3.IDENTITY)

init_hip_rot_matrix.RotationZ(rot)

device_setting = body_device.GetDeviceSetting()

device_setting.SetInitialHipRotation(init_hip_rot_matrix)

After calling SetInitialHipRotation, I encountered the issue shown in the image below.

It seems another problem needs to be solved when using the SetInitialHipRotation API.

After some testing, I eventually solved the facing direction issue by using the rot parameter in body_setting.SetCoordinateOffset(rot, pos).
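The thread does not say how the rot value was obtained. One plausible source, sketched here purely as an assumption, is the avatar's heading about the vertical axis, extracted from a unit quaternion in XYZW order with Z as the up axis. The function name is hypothetical, and whether SetCoordinateOffset expects degrees or radians is not confirmed here.

```python
import math


def yaw_about_z_degrees(q):
    """Heading (rotation about Z) of an XYZW unit quaternion, in degrees."""
    x, y, z, w = q
    siny_cosp = 2.0 * (w * z + x * y)
    cosy_cosp = 1.0 - 2.0 * (y * y + z * z)
    return math.degrees(math.atan2(siny_cosp, cosy_cosp))


# Hypothetical avatar rotation: a quarter turn about Z.
quarter_turn = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
heading = yaw_about_z_degrees(quarter_turn)
```

For the quarter-turn quaternion above the heading comes out at about 90 degrees, and the identity quaternion gives 0.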

Thank you again for your reply and support.

Best regards,

Brian

Hi Brian

I’m thrilled to hear that all the issues you faced have been resolved!

Honestly, I only lent a small hand—your hard work made it all happen!

Wishing your project smooth progress and the best of luck!

B.R

Johnny