How do I change the location of the big database? When I try, I get an error saying files are missing. I assume I have to copy some over to my new location? Please explain, thanks

Well, maybe RL needs to integrate something like Framepack in the future. And maybe in half a year, a year, or two it would shape up into something decent. But for now, in its current state, this plugin is no more than a teaser preview for iClone movie makers.

The problem is that base Framepack has no LoRA or ControlNet support. It only supports a starting image and a prompt, so you don’t have any control over camera movement or character movement.

These are all brand new experimental technologies.

Doesn’t seem to like cybereyes. I couldn’t get it to do anything emissive, unlike the iRay render on the left. It’s still a good replacement for the default renderer, though.

Hello @StyleMarshal, I already use ComfyUI with the fantacy plugin, MultiTalk, and a few different TTS tools. How did you get the lipsync with this new AI workflow integration? Did you set up the entire scene with the lipsync in IC, and then it brought them together?

07/29/2025

Reallusion, thank you very much for the beta stage of your plugin work with ComfyUI.

I have been working with ComfyUI for about a year now, and I feel you are making a very wise marketing move in leveraging the character and motion animation strengths of your 3D products to allow for a variety of image generation styles.

I have always appreciated stylized looks; Demon Slayer and Arcane are two examples.

With a character brought into iClone, animations prepared, and frames rendered out in a 2D style, this opens up many additional opportunities for Reallusion 3D productions.

Thank you to all the engineers, documentation staff and all other staff developing this feature.

Eric

Neither iClone nor CC loaded for me despite multiple installs.

I have both required versions of CC and iClone installed, and my machine specs are beyond the requirements. I do already have ComfyUI installed for other things.

How can I troubleshoot?

Is there a limit on what you can render out in the videos? I.e., 5 to 10 seconds is usually the norm, but one above might’ve been much longer.

Hi Dream_Think_Lab,

After launching iClone 8 or Character Creator 4, you can go to the top menu and select Script > Console Log to check for any error messages.

Please take a screenshot of any error messages that appear and share it with us, and we’ll help you troubleshoot the issue.

So I did some digging while watching the command prompt closely. And yes, it does indeed load the entire set of frames into VRAM for the iterations. Now questions… (as this is all new to me, I might be asking dumb questions).

What is the point of loading the entire set uncompressed from compressed MP4?

It iterates over the entire set sequentially. Why is that necessary? Can there be a separate option to load one frame, finish it, and go to the next, thus saving tons of VRAM? In other words, render it like an image sequence. And have an option to render the source data as an image sequence as well.

I only need an improvement in render quality, nothing else. Setting AI involvement to 0.2 - 0.3 is probably sufficient, and given there is a pose clip to control characters, there should not be much deviation between separately rendered frames. Or would there?
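
For a rough sense of scale, here is a back-of-the-envelope sketch of how much memory a full stack of decoded frames could take. It is only arithmetic, not how the plugin or ComfyUI actually stores frames: the float32 RGB layout, the 10-second clip length, and the function names are all assumptions made for illustration, and a real pipeline may work on smaller latents instead.

```python
def frame_stack_bytes(num_frames, width, height, channels=3, bytes_per_value=4):
    """Bytes needed to hold every decoded frame at once.

    Assumes uncompressed frames with `channels` values per pixel and
    `bytes_per_value` bytes per value (4 = float32, an assumption).
    """
    return num_frames * width * height * channels * bytes_per_value


def to_gib(num_bytes):
    """Convert a byte count to gibibytes."""
    return num_bytes / 2**30


if __name__ == "__main__":
    clip_seconds = 10  # hypothetical clip length
    for fps in (24, 16):
        frames = fps * clip_seconds
        total = frame_stack_bytes(frames, 1280, 720)
        print(f"{fps} fps: {frames} frames, {to_gib(total):.2f} GiB")
```

Under these assumptions the total grows linearly with frame count, so a lower FPS or a shorter clip shrinks it proportionally, while a frame-by-frame loop would only need `frame_stack_bytes(1, ...)` for the source image at any one time.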

With AI, you basically have to spell it out. If you want glowing eyes, type that into the text prompt, and be very specific. In your case, something like “glowing iris, black sclera”, and hope the AI model you have loaded understands these keywords. Some AI models understand these things better than others, depending on how they were trained. Model training consists of associating things in the image dataset with keywords (this is called “tagging”), so how well a model responds to specific keywords depends on what tags were used in training.

Therefore, if my suggested keywords don’t work with your character, it doesn’t mean that no AI model will understand them, only that this specific one (the one you are using) doesn’t. Sometimes it’s a matter of finding which AI models work best with the things you prompt for. ^^

One thing that kind of bugs me here is that RL chose to use the ancient Stable Diffusion 1.5, which I consider obsolete by today’s standards, and which produces poor results in general when compared to more modern options (like SDXL). But I understand they picked SD 1.5 because its files are smaller, so it’s easier (lighter) to distribute. I use SDXL almost exclusively, and it’s not even the latest and greatest, but it offers a great trade-off between size and quality. ^^

Hope this helps.

After lowering the FPS from 24 to 16 and shortening the clip, I was able to render at 720p.

Photo-realism mode, no prompts other than defaults (they would probably not work anyway, as AI creativity was set to 0.2), Fast 12 iterations mode. It took a little over an hour to render.

Here is an iClone vs AI animation render.

One would rather expect poke-through in iClone, but here it is the AI that messed up the skirt and the cloak. Overall, though, the AI-generated/tweaked cloak physics is better than iClone’s.

Lip-syncing is almost as good, but the eyes are not. The small eyes and mid-range shot probably contributed to the AI’s confusion there. Maybe cranking up the iterations would have given better results…

Hi dpolcino

Since AI Render is still in beta, to ensure the default path is correctly registered in the system, we recommend uninstalling it first via Apps & Features in Windows Settings. Then, reinstall it and make sure to select a suitable location with enough storage space for ComfyUI during setup.

Please note that any previously downloaded or manually added models will not be deleted. You can move them to the new location after reinstallation.

To my understanding, that’s how the model architecture was built and trained.

Similar to Stable Diffusion, which recreates a single image backwards from noise and the prompt, the idea behind video models is also to recreate an image from noise. But in that case, the image is all the video frames placed next to each other in a giant texture sheet; for example, for 49 frames it’s a 7x7 grid of images.
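
To make the texture-sheet picture above concrete, here is a tiny sketch of how frame indices could map onto such a grid. It only illustrates the layout as described in this post, not the internals of any particular video model; the function names and the row-major ordering are assumptions.

```python
import math


def grid_side(num_frames):
    """Smallest square grid side that fits all frames (7 for 49 frames)."""
    side = math.isqrt(num_frames)
    if side * side < num_frames:
        side += 1
    return side


def grid_position(frame_index, num_frames):
    """(row, col) of a frame in the sheet, assuming row-major order."""
    side = grid_side(num_frames)
    return divmod(frame_index, side)


if __name__ == "__main__":
    for index in (0, 10, 48):
        print(index, grid_position(index, 49))
```

The point of the illustration is that, in this view, every frame sits in the same sheet during each iteration, which is why the whole set would need to be in memory together.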

I am using a more advanced Comfy setup with very strong Wan models and all the speed-ups (Triton, SageAttention, lightx2v), and I am now testing Wan2.2.

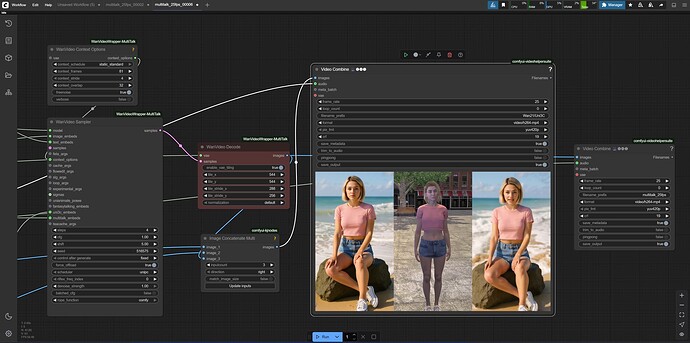

I created the image, poses, and camera motion in IC-AI and used them as the reference for the final generation. For close-up characters, lipsync works very well with the pose CN (ControlNet); for characters farther away I use MultiTalk in combination with the Kijai WanVideoWrapper. So the first 5 sec was generated via prompting, and then the last image of that generation was used as the reference for MultiTalk to generate the lipsync (13 sec), all automated in Comfy. With the help of context options you can generate videos of up to 1 min in one go. But as I said, it is very advanced. I love the iClone AI plugin for controlling the camera motion and animations/poses. The 18 sec video above was generated in 15 min at 720 x 1280 pixels. To control the camera with IC you can use the uni3c node in the wrapper.

The iClone AI Render beta is just getting started, and Reallusion made the integration easy to use and understand. Updates and more nodes will follow… As I said, AI dev is STRONG. Hope I could help a little bit…

I personally render less and generate more and more.

I still haven’t downloaded this and given it a try, but I saw it mentioned that it needs to install ComfyUI in the background.

Are there any options for people who already have ComfyUI installed to forego this?

It would also be really cool to expose the workflow so that we can customize it ourselves in Comfy.

I did it with “glowing red iris in right eye” in the prompt but still no joy. I’m not a fan of AI, as it puts people like me out of work. The realism is fairly nifty, though. The image, itself, could be better, but I haven’t done a high poly sculpt of the character yet. Been too focused on zbrush sculpts for cybereyes that should make it to the marketplace in a month or so.

Thanks, Melvin. It is not showing up at all. All paths are default.

Here is a video of the install and results…

https://www.dropbox.com/scl/fi/9dn7m5w2lpwvh9pa2d1bd/Install-CC-AI.mkv?rlkey=zs8h9t2c8o5jkko426wlgrjj8&st=xlbppqjp&dl=0

Log:

CC/iC Blender Pipeline Plugin: Initialize

using Blender Executable Path: C:\Program Files\Blender Foundation\Blender 4.4\blender.exe

Using Datalink Folder: C:\Users\ts\Documents\Reallusion\DataLink

I have a question

Now that we have ‘patched’ iClone for it to use AI Render,

how is iClone going to update via the Hub?

Or will it update as normal?

Thank you in advance

Cheers