I made this auto-processing tool for Blender for retargeting Audio2Face motions from iClone. It assumes you've already gone through the A2F process: feeding in the audio, exporting the JSON files, using the A2F plugin in iClone, exporting the motions, etc.

When you import into Blender using the CC plugin and retarget, the motion only works correctly if you retarget the shape keys on the body. The retargeter is relentless, though: it retargets everything you have on the CC4 mesh.

So, for example, if you have a clothing item, an accessory, shoes, etc., you'll find a retargeted motion for each item on your character. Of course, the only two motions you need for the speech to work are the rig action and the body shape key action. If you don't name them immediately and delete the rest, it can get real messy real quick, especially if you're retargeting in batch for big cinematic scenes where characters have a lot of dialogue.

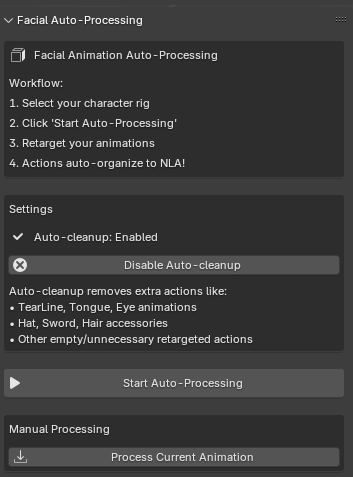

So that's where the addon I made comes in handy. I started out doing it all manually: renaming the rig action to “charactername_RA_speech_01” (RA for rig action) and doing the same for the shape key action, but with SA.
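
To make the naming pattern concrete, here is a minimal sketch in plain Python. It is not the addon's actual code; the function name `build_action_name` and the `label` parameter are made up for illustration, and only the “charactername_RA_speech_01” pattern comes from the description above.

```python
def build_action_name(character: str, kind: str, index: int, label: str = "speech") -> str:
    """Build a name such as 'charactername_RA_speech_01'.

    kind is 'RA' (rig action) or 'SA' (shape key action).
    This helper is hypothetical and only illustrates the naming pattern.
    """
    if kind not in ("RA", "SA"):
        raise ValueError("kind must be 'RA' or 'SA'")
    # Zero-pad the index to two digits so names sort in dialogue order.
    return f"{character}_{kind}_{label}_{index:02d}"


print(build_action_name("charactername", "RA", 1))
print(build_action_name("charactername", "SA", 1))
```

The two-digit padding keeps the actions in dialogue order when they are listed alphabetically.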

Basically, one for the rig and another for the body mesh. Then I push them down on the NLA, delete the unwanted actions, and rinse and repeat for the next dialogue, except now I add 02 at the end. Oh, and I need to delete the body bone keyframes from the speech, because I use layers and I just want my dialogue action to, well, have keyframes only on the face and head (since A2F adds a bit of head movement).
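
The body-bone cleanup boils down to deciding, per animation curve, whether it belongs to a face or head bone. Below is a rough sketch of that decision in plain Python, assuming the curves are identified by Blender-style data paths like `pose.bones["Name"].rotation_quaternion`. The keyword list and the example bone names are assumptions for illustration and will differ depending on the rig.

```python
import re

# Hypothetical keywords marking face/head bones; adjust to the actual rig.
KEEP_KEYWORDS = ("head", "jaw", "eye", "facial", "tongue", "teeth")

# Matches the bone name at the start of a pose-bone data path.
_BONE_RE = re.compile(r'pose\.bones\["([^"]+)"\]')


def bone_from_data_path(data_path):
    """Return the bone name in a pose-bone data path, or None if there is none."""
    match = _BONE_RE.match(data_path)
    return match.group(1) if match else None


def is_body_bone_curve(data_path):
    """True if the curve animates a bone that is not a face/head bone."""
    bone = bone_from_data_path(data_path)
    if bone is None:
        return False  # not a pose-bone curve, so leave it alone
    name = bone.lower()
    return not any(keyword in name for keyword in KEEP_KEYWORDS)


# Example data paths (bone names are illustrative only).
paths = [
    'pose.bones["CC_Base_Head"].rotation_quaternion',
    'pose.bones["CC_Base_JawRoot"].rotation_euler',
    'pose.bones["CC_Base_Spine01"].rotation_quaternion',
    'pose.bones["CC_Base_L_Upperarm"].location',
]
to_delete = [p for p in paths if is_body_bone_curve(p)]
print(to_delete)
```

Inside Blender, the same test could be run against each F-curve's `data_path` in the speech action, removing the curves that match.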

The processor does all of that instantly each time I retarget. It increments the number when it detects a new action, based on the ID on the character, and if I start retargeting a new character (again based on the ID) it knows and starts at 01 again. It also pushes the actions to the NLA and deletes the body bone keyframes. Basically, what took me a month back then takes me 10 minutes now.
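
The per-character numbering can be pictured as one counter per character ID. This is only a sketch of the idea in plain Python, not how the addon is actually written; the class name `SpeechNamer` and the in-memory dictionary are assumptions (a real tool might instead scan the actions that already exist in the file).

```python
from collections import defaultdict


class SpeechNamer:
    """Hand out numbered rig/shape key action names per character ID."""

    def __init__(self):
        # One counter per character ID; unseen characters start at 0.
        self._counts = defaultdict(int)

    def next_names(self, character_id):
        """Return (rig_action_name, shape_key_action_name) for the next dialogue."""
        self._counts[character_id] += 1
        n = self._counts[character_id]
        return (
            f"{character_id}_RA_speech_{n:02d}",
            f"{character_id}_SA_speech_{n:02d}",
        )


namer = SpeechNamer()
print(namer.next_names("Anna"))  # first dialogue for Anna
print(namer.next_names("Anna"))  # second dialogue for Anna
print(namer.next_names("Ben"))   # new character, numbering starts over
```

Because each character ID has its own counter, switching to a new character starts at 01 without disturbing the numbering of the previous one.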

Just thought I would share my process. Thanks for reading.